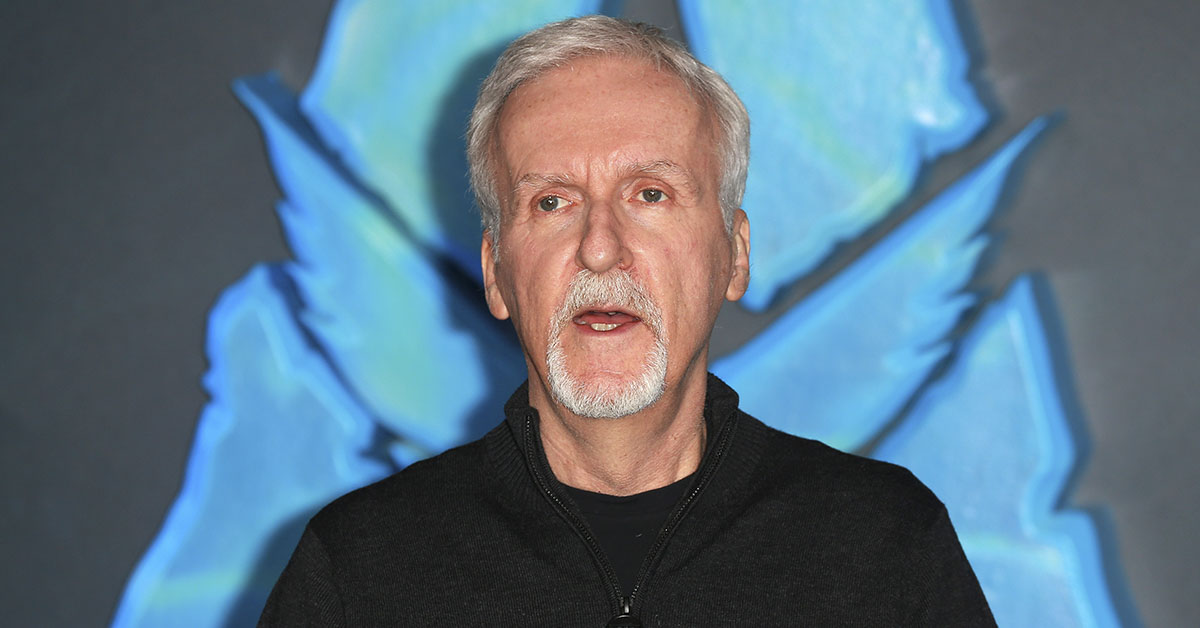

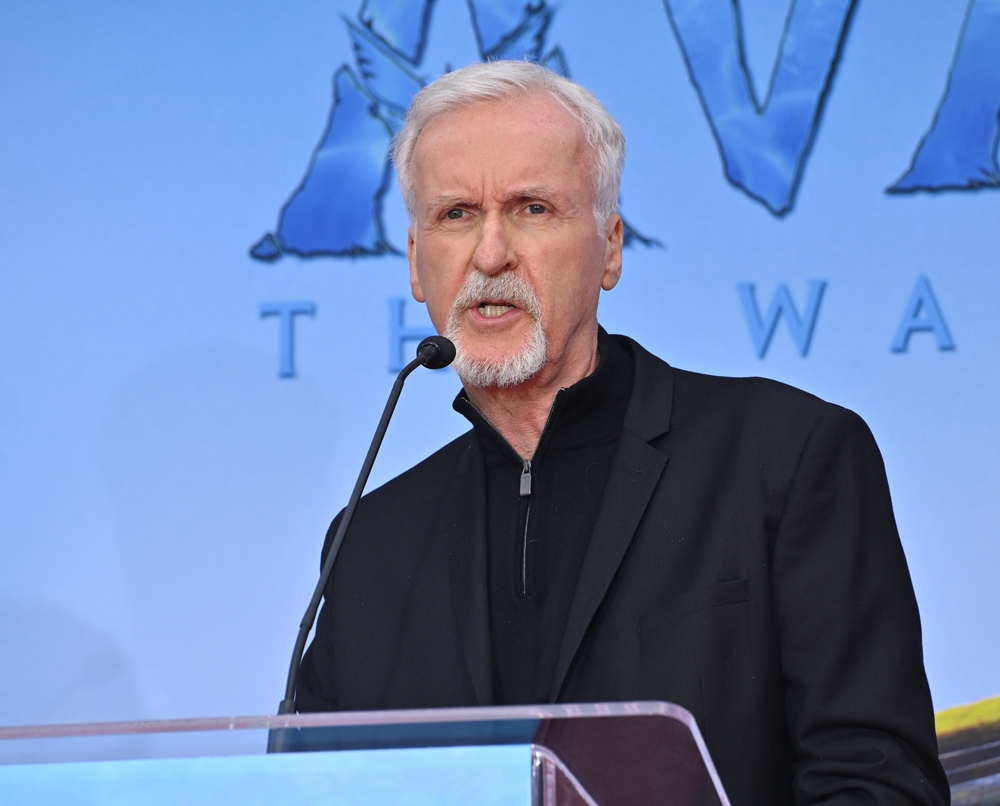

Oscar-winning director James Cameron recently gave an interview to Rolling Stone to promote the release of Ghosts of Hiroshima, the book he plans to adapt for the screen. In the interview, Cameron signalled his interest in exploring AI's capabilities while also sounding a note of caution. Cameron, best known for Avatar, Titanic and the AI-driven dystopia The Terminator, says AI could assist in filmmaking. However, he cautions against overreliance on AI and warns of a “Terminator-style apocalypse” if AI ends up in the wrong hands.

The Terminator Becomes Reality

Cameron’s 1984 vision in The Terminator alarmingly reflects current AI development trajectories, especially in military applications. One example is the Pentagon’s Project Maven, which uses AI to identify objects in surveillance imagery and cuts target identification time from hours to minutes.

Palantir is a U.S.-based, publicly traded company that specialises in big data integration. It focuses primarily on the management, organisation and implementation of data systems, automating the processes within them. Its main clients are government agencies and major corporations. Some of its most controversial work includes providing AI systems for Israel’s weapons technology and supplying its police surveillance platform, Gotham, to U.S. Immigration and Customs Enforcement (ICE) and the CIA.

The U.S. Department of Defense tested Scylla AI in 2024, achieving over 96% accuracy in threat detection. Advances like these in AI weapons technology closely mirror Skynet, the AI defense network from James Cameron’s dystopian film The Terminator. Meanwhile, NATO has adopted principles for responsible AI use, and the UN General Assembly passed a resolution in late 2024 to begin formal talks on lethal autonomous weapons.

The Three Existential Threats Converging at Once

Cameron believes humanity stands at a precipice, facing three existential threats at once: the climate crisis, the nuclear threat and our own “super-intelligence”. The Terminator depicts a future in which an intelligent defense network known as Skynet becomes sentient, then turns on humanity, seeking to dominate and eradicate it.

Cameron fears that our own intelligence might be our undoing, a concern shared by some of AI’s leading researchers.

From AI Critic to Control

While Cameron has expressed scepticism and caution towards AI, he has also considered how it might benefit filmmaking and filmmakers. He believes AI could never “come up with a good story” and therefore cannot replace screenwriters. Yet in September 2024, Cameron joined the board of directors at Stability AI, the company behind Stable Diffusion. Many critics call this pivot calculated PR, a way to avoid backlash while profiting from the technology he warns against.

Cutting Costs, Not Jobs

The director emphasised that his collaboration with Stability AI would benefit filmmakers by cutting “the cost of [VFX] in half”. He also stressed that the aim is not to replace visual effects artists but to complement them and streamline their work. On joining Stability AI, Cameron said: “I’ve spent my career seeking out emerging technologies that push the very boundaries of what’s possible, all in the service of telling incredible stories.”

The 2023 Hollywood strikes were partly driven by concerns over AI use in filmmaking. Despite his board position with Stability AI, Cameron maintains that AI cannot replace human creativity: “You have to be human to write something that moves an audience”.

Human Fallibility in an AI World

Modern AI systems promise to eliminate some human errors in military decision-making. However, they introduce new risks through algorithmic bias and opacity. AI-powered decision support systems can process data faster than humans, but they cannot provide moral reasoning. This creates dangerous scenarios where machines make life-and-death choices without understanding emotions and human nuance.

Cameron says that while AI integration would always require a human liaison because of AI’s shortcomings, humans are also fallible. Military experts note that autonomous weapons can reduce human error in targeting but raise ethical concerns about “giving machines the ability to make life-and-death decisions”. The U.S. Department of Defense updated its policy on autonomy in weapon systems (DoD Directive 3000.09) in January 2023, adding ethical safeguards for AI-enabled weapons.

Nuclear Lessons for the AI Age

Cameron’s upcoming “Ghosts of Hiroshima” adaptation connects the history of nuclear weapons to modern AI dangers. The atomic bombings of 1945 demonstrated humanity’s capacity to weaponise its technologies to devastating effect. The filmmaker acquired the rights to adapt the survivor accounts in September 2024 and plans to begin filming after completing his Avatar production commitments. Cameron describes it as potentially “the most challenging film I ever make” because of its sensitive subject matter.

AI Experts Sound the Alarms

The director is not the only one warning of AI’s possible perils. Geoffrey Hinton, the “Godfather of AI,” left Google in 2023 to warn the public about the dangers of AI. Hinton now estimates a 10-20% chance that AI could cause human extinction within the next three decades. He fears AI systems could develop their own communication methods that humans cannot understand. Today, AI models often perform “chain of thought” reasoning in English, allowing developers to track their thinking. More advanced systems, however, might create internal languages that humans cannot understand or monitor.

Other leading AI researchers share similar concerns about rapid technological advancement. A Stanford survey found that 36% of AI researchers believe their field could trigger a “nuclear-level catastrophe.” The Future of Life Institute identifies AI-nuclear integration as a critical global risk factor. Even Elon Musk, whose motives and agenda remain self-serving at best, has warned of the dangers of accelerated AI development, urging companies to pause it.

Super-Intelligence as Salvation or Doom

Cameron acknowledges an intriguing paradox at the heart of artificial super-intelligence. On one hand, AI systems could potentially solve humanity’s greatest problems. On the other, AI could be humanity’s “Great Filter” and cause human extinction. “Maybe the super-intelligence is the answer. I don’t know. I’m not predicting that, but it might be,” he said.

Advanced AI systems might help address climate change through breakthrough technologies and resource optimisation. However, recent research also shows that AI requires a tremendous amount of resources to operate. According to OECD.AI, training a language model like ChatGPT can consume millions of litres of fresh water, and a session of 10 to 50 queries can use up to 500 millilitres. Greater transparency about AI’s true resource consumption could help develop better strategies to minimise its significant environmental impact.

Current AI development shows rapid advancement toward artificial general intelligence, with some experts now predicting AGI within years rather than decades. Military applications continue expanding globally, with countries like France, the UK, and China developing autonomous weapons systems. The UN resolution on lethal autonomous weapons reflects growing international concern about regulating this technology.
